This game is not releasing soon. Apart from the prototype I made last year, the game is still in pre-production. Cube Royale and VizionEck Adventure are both scheduled to release first. However, since this game is so unique and isn't story-focused, I'm opening up development and sharing every step of the journey from beginning to end.

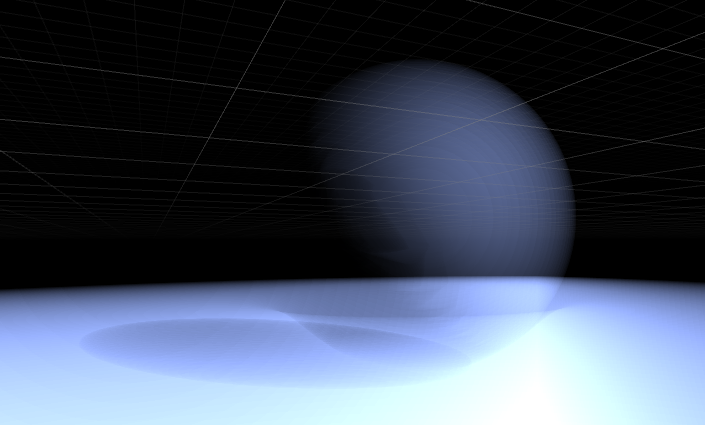

"Imagine a beautiful and surreal 4D island that you are free to explore at your own pace. Things aren't always peaceful though, as the island is inhabited by ~12 legendary beasts. Embrace your destiny and use swords, shields, and arrows to defeat them."

The fundamental goal is to make a game that is NOT focused on the fourth dimension. The game's story does not involve dimensions and the gameplay does not revolve around dimensions. Let me explain this a little bit.

We live in 3 spatial dimensions. It is considered impossible for us to see 4 spatial dimensions, so it's common to think of the fourth dimension as something extra and unique that's off to the "side" of our 3D world. There are lots of cool movies, games, and books with this approach. Fundamentally, however, they are not full 4D. They are 3.5D in the same sense that LittleBigPlanet and other similar games are 2.5D. The fundamental goal of this game is for it to be truly full 4D and let players see directly in 4D. If 4D humans existed and they had 4D video game consoles, this game would be just a normal game for them, without modification.

It's delightfully counterintuitive, but full 4D makes the game more approachable too. Our brains are really good at interpreting 2D images as 3D scenes, and this carries over to 4D scenes. A player can pick up a controller and understand motion instantly. Ana/kata movements (the two directions along the fourth axis) don't map to 3D motion, but it's still easy enough to understand them as a game mechanic. Basic rotations are also trivial to understand. Complex rotations are the outlier and take a long time to get used to.
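To make the rotation distinction a bit more concrete, here is a minimal Python sketch. It assumes that "basic" means a rotation in a single coordinate plane and "complex" means a double rotation in two perpendicular planes at once; that reading is a guess, not something stated above. The helper names (`plane_rotation`, `apply`, `matmul`) and the X, Y, Z, W axis labels are made up for illustration and are not from the game's code.

```python
import math

# Hypothetical axis labels; W is the axis that ana/kata movement runs along.
X, Y, Z, W = range(4)

def plane_rotation(i, j, angle, dim=4):
    """Return a dim x dim matrix that rotates the (i, j) coordinate plane."""
    m = [[1.0 if r == c else 0.0 for c in range(dim)] for r in range(dim)]
    c, s = math.cos(angle), math.sin(angle)
    m[i][i], m[i][j] = c, -s
    m[j][i], m[j][j] = s, c
    return m

def apply(m, v):
    """Multiply matrix m by column vector v."""
    return [sum(m[r][k] * v[k] for k in range(len(v))) for r in range(len(m))]

def matmul(a, b):
    """Multiply two square matrices of the same size."""
    n = len(a)
    return [[sum(a[r][k] * b[k][c] for k in range(n)) for c in range(n)]
            for r in range(n)]

# Simple rotation: turns X toward W and leaves the whole YZ plane fixed.
simple = plane_rotation(X, W, math.pi / 2)

# Double rotation: XY and ZW rotate together, so only the origin stays fixed.
double = matmul(plane_rotation(X, Y, math.pi / 2),
                plane_rotation(Z, W, math.pi / 2))

point = [1.0, 2.0, 3.0, 4.0]
simple_result = apply(simple, point)  # y and z are unchanged
double_result = apply(double, point)  # every coordinate changes
```

In 3D a rotation always leaves a line (the axis) in place. In 4D a simple rotation leaves a whole plane in place, and a double rotation leaves nothing but a single point, which may be part of why the second kind is harder to build intuition for.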

Frequently Asked Questions

Q: How can a true 4D game be seen?

A: A 4D camera renders 3D blocks of pixels. There is no perfect way to present these 3D pixels, so the game has multiple methods that the player can choose from. These include intuitive methods like VR and non-intuitive methods like space-filling curves.
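As a rough illustration of the idea, the Python sketch below shows one way a 4D point could be perspective-projected into a 3D image volume, and one way a 3D block of pixels could be unrolled along a space-filling curve. Everything here is an assumption for illustration: the camera sits at the origin looking along +w, the `focal` parameter is hypothetical, and the Z-order (Morton) curve is swapped in as the simplest space-filling curve to code. The game's actual camera model and choice of curve are not specified above.

```python
def project_4d_to_3d(point, focal=1.0):
    """Perspective-project (x, y, z, w) into a 3D image volume.

    Assumes a camera at the origin looking along +w (an illustrative choice).
    Returns None for points at or behind the camera.
    """
    x, y, z, w = point
    if w <= 0:
        return None
    scale = focal / w
    return (x * scale, y * scale, z * scale)

def morton_index(x, y, z, bits=4):
    """Interleave the bits of three voxel coordinates into one Z-order index."""
    index = 0
    for b in range(bits):
        index |= ((x >> b) & 1) << (3 * b)
        index |= ((y >> b) & 1) << (3 * b + 1)
        index |= ((z >> b) & 1) << (3 * b + 2)
    return index

def flatten_block(block):
    """Unroll a cubic block[z][y][x] of pixels into a list in Z-order."""
    n = len(block)
    bits = max(1, (n - 1).bit_length())
    coords = [(x, y, z) for z in range(n) for y in range(n) for x in range(n)]
    coords.sort(key=lambda c: morton_index(*c, bits=bits))
    return [block[z][y][x] for (x, y, z) in coords]

# A 2x2x2 block whose pixel values encode their own position.
block = [[[x + 2 * y + 4 * z for x in range(2)] for y in range(2)]
         for z in range(2)]
flat = flatten_block(block)
```

The projection is the same divide-by-depth trick a 3D camera uses, just with one more coordinate, so the "image" comes out as a volume instead of a flat picture. The curve then visits every 3D pixel exactly once, and pixels that are close along the curve tend to be close in the volume, though the reverse does not always hold.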

Q: Is this game only for people that like math?

A: This is not a math game. The target audience is people that just want a cool indie game with swords, monsters, and puzzles.

Q: What if I only like math?

A: The game is open in nature. You can avoid combat and explore the 4D environments.

Q: Is the game called "4D video game"?

A: No. I still need to figure out an actual name for it.

Q: What does it play like?

A: Shadow of the Colossus in 4D is a pretty apt comparison. The environments will have a bigger focus, however, since 4D trees, mountains, rivers, shores, etc. are so incredibly interesting.

This is just the first version of this OP. I'll probably overhaul it in the coming months once I have screenshots to share.